Google Cloud

What is Google Cloud?

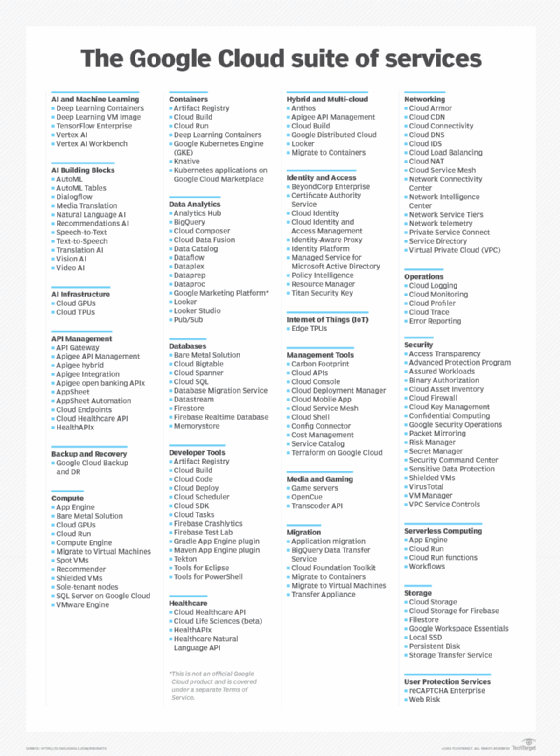

Google Cloud is a suite of public cloud computing services offered by Google. The platform includes a range of hosted services for compute, storage and application development that run on Google hardware. Google Cloud services can be accessed by software developers, cloud administrators and other enterprise IT professionals over the public internet or through a dedicated network connection.

Overview of Google Cloud offerings

Google Cloud offers services for compute, storage, networking, big data, machine learning and IoT, as well as cloud management, security and developer tools. Some of the cloud computing products in Google Cloud include the following:

- Google Compute Engine, which is an infrastructure as a service (IaaS) offering that provides users with VM instances for workload hosting.

- Google App Engine, which is a platform as a service (PaaS) offering that gives software developers access to Google's scalable hosting. Developers can also use an SDK to develop software products that run on App Engine.

- Google Cloud Storage, which is a cloud storage platform designed to store large, unstructured data sets. Google also offers database storage options, including Cloud Datastore for NoSQL nonrelational storage, Cloud SQL for MySQL fully relational storage and Google's native Cloud Bigtable database.

- Google Kubernetes Engine (GKE), which is a management and orchestration system for Docker container and container clusters that run within Google's public cloud services. Google Kubernetes Engine is based on Kubernetes, Google's open source container management system.

- Google Cloud's operations suite, formerly Stackdriver, which is a set of integrated tools for monitoring, logging and reporting on the managed services driving applications and systems on Google Cloud.

- Serverless computing, which provides tools and services for event-based workload execution, such as Cloud Functions for creating functions that handle cloud events, Cloud Run for managing and running containerized applications and Workflows to orchestrate serverless products and APIs.

- Databases, which is a suite of database products delivered as completely managed services, including Cloud Bigtable for large-scale, low-latency workloads; Firestore for documents; CloudSpanner as a highly scalable, highly reliable relational database; and CloudSQL as a fully managed database for MySQL, PostgreSQL and SQL Server.

Google Cloud offers application development and integration services. For example, Google Cloud Pub/Sub is a managed and real-time messaging service that allows messages to be exchanged between applications. In addition, Google Cloud Endpoints enables developers to create services based on RESTful APIs and then make those services accessible to Apple iOS, Android and JavaScript clients. Other offerings include Anycast DNS servers, direct network interconnections, load balancing, monitoring and logging services.

Higher-level services

Google continues to add higher-level services, such as those related to big data and machine learning, to its cloud platform. Google big data services include those for data processing and analytics, such as Google BigQuery for SQL-like queries made against multi-terabyte data sets. In addition, Google Cloud Dataflow is a data processing service intended for analytics; extract, transform and load; and real-time computational projects. The platform also includes Google Cloud Dataproc, which offers Apache Spark and Hadoop services for big data processing.

For AI, Google offers its Cloud Machine Learning Engine, a managed service that enables users to build and train machine learning models. Various APIs are also available for the translation and analysis of speech, text, images and videos.

Google also provides services for IoT, such as Google Cloud IoT Core, which is a series of managed services that enables users to consume and manage data from IoT devices. Edge TPU provides dedicated hardware designed to accelerate machine learning and AI at the IoT edge.

Google Cloud provides an array of tools designed to assist with data and workload migrations. Examples include Application Migration to the cloud, BigQuery Data Transfer Service for scheduling and moving data into BigQuery, Database Migration Service to enable easy migrations to Cloud SQL, Migrate for Anthos to help migrate VMs into containers on GKE, Migrate for Compute Engine to bring VMs and physical servers to Compute Engine, and Storage Transfer Service to handle data transfers to Cloud Storage.

The Google Cloud suite of services is always evolving, and Google periodically introduces, changes or discontinues services based on user demand or competitive pressures. Google's main competitors in the public cloud computing market include AWS and Microsoft Azure.

Google Cloud pricing options

Like other public cloud offerings, most Google Cloud services follow a pay-as-you-go model in which there are no upfront payments and users only pay for the cloud resources they consume. Specific terms and rates, however, vary from service to service.

Discounts might be available for some services with long-term commitments. For example, committed use discounts on Compute Engine resources such as instance types or GPUs can yield more than 50% discounts. Google Cloud adopters should consult with Google sales staff and in-house cloud architects and use cloud pricing estimation tools, such as Google Cloud Pricing Calculator, to estimate the pricing of prospective cloud deployments.

Google Cloud competitors

Google Cloud faces strong competition from other public cloud providers -- leading this competitive landscape are AWS and Microsoft Azure.

- AWS is the oldest and most mature public cloud that emerged as a public service in 2006. It typically offers the broadest range of general tools and services, and it possesses the largest market share by appealing to a broad customer base ranging from individual developers to major enterprises to government agencies.

- Microsoft Azure appeared in 2010 and has proven particularly attractive to Microsoft-based environments. This has made it easier to transition workloads from data centers to Azure, and even build hybrid environments. Azure is the second-largest public cloud, often catering to larger enterprise users.

- Google Cloud also appeared in 2010 and is currently the smallest of the three major public clouds. However, Google Cloud has developed a strong reputation for its compute, network, big data and machine learning/AI services.

But the differences between providers are eroding as all three public clouds are evolving to offer similar suites of services and capabilities. As an example, Google Cloud's Config Connector used for app modernization is matched by AWS Controllers for Kubernetes and Azure Service Operator. There are only a handful of Google Cloud services not matched by an AWS and/or Azure analog. As examples, Google Cloud's Binary Authorization service for container security and the Error Reporting tool for software developers currently have no matching services from AWS or Azure.

Cloud adopters should carefully investigate and experiment with the suite of services provided by each cloud provider before committing to a particular platform, though multi-cloud environments are increasingly common among enterprise users.

Google Cloud certification paths

Public clouds can offer hundreds of individual services, enabling users to assemble comprehensive cloud infrastructures capable of deploying, securing and monitoring complex enterprise workloads. Effective use of cloud services, therefore, depends heavily on the users' knowledge and expertise surrounding those offerings. This has driven the need for cloud training and certification, and Google offers training programs and certifications related to Google Cloud.

Training options offer free or low-cost onramps to Google Cloud services and approaches. Cloud users can explore a range of training options including the following:

- cloud infrastructure

- application development

- Kubernetes, hybrid and multi-cloud

- data engineering and analytics

- API management

- networking and security

- machine learning and AI

- cloud business leadership

- Google Workspace

Google also promotes and endorses certifications for cloud users that choose to validate their expertise on a professional level. Certification paths are typical for cloud professionals as part of ongoing professional development or as a requirement for professional cloud employment. Certifications are also used by employers as vital benchmarks in measuring the capabilities and knowledge levels of prospective candidates for cloud-related jobs. There are currently three levels of Google cloud certification.

- Foundational certification. This is the introductory certification that conveys a wide range of basic knowledge and concepts of Google Cloud resources, tools and services. This certification is suited to new or otherwise non-technical cloud users with little (if any) experience with Google Cloud.

- Associate certification. This is the main practical certification for Google Cloud, allowing users to focus on cloud issues such as deployment, monitoring and maintenance of workloads running in Google Cloud. This certification is suited for Cloud Engineer roles. Many professionals will include an Associate certification along the educational path to professional certification.

- Professional certifications. These are the top-tier certifications for Google Cloud and validate advanced concepts and skills in design, implementation and management within Google Cloud. Participants seeking a Professional certification should have at least three years of industry experience (including at least one year of hands-on experience with Google Cloud). Professional certifications currently cover eight specializations including Cloud Architect, Cloud Developer, Data Engineer, Cloud DevOps Engineer, Cloud Security Engineer, Cloud Network Engineer, Collaboration Engineer and Machine Learning Engineer.