cloud bursting

What is cloud bursting?

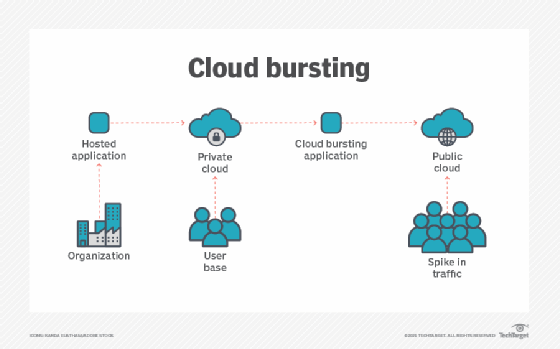

Cloud bursting is an application deployment technique in which an application runs in a private cloud or data center and bursts into a public cloud when the demand for computing capacity spikes. This deployment model gives an organization access to more computing resources when needed.

When compute demand exceeds the capacity of a private cloud, cloud bursting gives an organization additional flexibility to deal with peaks in IT demand. In addition, cloud bursting frees up local resources for other critical applications.

The advantage of a hybrid cloud deployment model like cloud bursting is that an organization only pays for extra compute resources when they are needed.

The private cloud is the primary means of deployment in a cloud bursting model, with public cloud resources being used in times of increased traffic. When a private cloud reaches its resource capacity, overflow traffic is directed toward a public cloud without service interruption. Once traffic returns to normal levels, data is moved back to the private cloud. Cloud bursts can be triggered either automatically, based on high usage demands, or manually via a request.

When using cloud bursting, an organization should keep its security requirements, platform compatibility and compliance requirements in mind. Because private clouds are generally more protected than public clouds, cloud bursting is not recommended for critical applications or data, since that data would move between clouds.

How does cloud bursting work?

IT administrators help establish capacity thresholds for applications in the private cloud. When a workload nears its threshold, the application automatically switches over to the public cloud and traffic is pointed toward it. Once the spike in resource demand diminishes, the application is moved back to the private cloud or on-premises infrastructure.
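The threshold behavior described above can be sketched in a few lines of Python. This is a minimal illustration, not a production controller: the `BurstController` class, the 80% burst threshold and the separate 60% return threshold are all assumptions made for the example. The second, lower threshold is a design choice added here so the application does not flip back and forth when utilization hovers around a single value.

```python
from dataclasses import dataclass


@dataclass
class BurstController:
    """Decides where an application should run, based on private cloud utilization."""

    burst_threshold: float = 0.80   # assumed: burst at 80% utilization
    return_threshold: float = 0.60  # assumed: move back at or below 60%
    bursting: bool = False

    def update(self, utilization: float) -> str:
        """Take the current utilization (0.0 to 1.0) and return the target cloud."""
        if not self.bursting and utilization >= self.burst_threshold:
            self.bursting = True    # demand spike: switch over to the public cloud
        elif self.bursting and utilization <= self.return_threshold:
            self.bursting = False   # spike is over: move back to the private cloud
        return "public" if self.bursting else "private"


controller = BurstController()
samples = [0.50, 0.82, 0.70, 0.55, 0.40]  # hypothetical utilization readings
targets = [controller.update(u) for u in samples]
print(targets)  # ['private', 'public', 'public', 'private', 'private']
```

Note that the reading of 0.70 still maps to the public cloud: the application stays burst until utilization falls to the lower threshold.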

An organization can take one of the following approaches to cloud bursting:

- Distributed load balancing. With distributed load balancing, applications operate between a public cloud and a data center. When traffic hits a predefined threshold, workload traffic is redirected to an identical environment in a public cloud. This method requires the application to be deployed both locally and in the public cloud, with load balancing operations to share traffic between them.

- Automated bursting. Automated bursting requires an organization to set policies to define how bursting is handled. Once set, an application hosted in a private cloud can automatically burst over into a public cloud. Software is used to automatically switch the application over. This helps an organization provision cloud resources exactly when needed without delay.

- Manual bursting. Manual bursting enables an organization to manually provision and deprovision cloud services and resources. Manual cloud bursting is suitable for temporary large cloud deployments, when increased traffic is expected or to free up local resources for business-critical applications.
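As a rough illustration of the distributed load balancing approach, the sketch below splits incoming traffic between the two environments. The function name `split_traffic` and the capacity figures are hypothetical; a real load balancer would also weigh health checks, latency and session affinity, which are left out here.

```python
from typing import Dict


def split_traffic(requests_per_second: int, private_capacity: int) -> Dict[str, int]:
    """Fill the private environment up to its threshold; overflow goes public."""
    if requests_per_second < 0 or private_capacity < 0:
        raise ValueError("traffic and capacity must be non-negative")
    to_private = min(requests_per_second, private_capacity)
    to_public = requests_per_second - to_private
    return {"private": to_private, "public": to_public}


# Hypothetical threshold of 1,000 requests per second for the private environment.
print(split_traffic(400, 1000))   # {'private': 400, 'public': 0}
print(split_traffic(1500, 1000))  # {'private': 1000, 'public': 500}
```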

When does an organization need cloud bursting?

Cloud bursting is recommended for high-performance, noncritical applications that handle nonsensitive information. An application can be deployed locally and then burst to the cloud to meet peak demands, or the application can be moved to the public cloud to free up local resources for business-critical applications. Cloud bursting works best for applications that do not depend on a complex application delivery infrastructure or integration with other applications, components and systems internal to the data center.

When considering cloud bursting, an organization must also examine security and regulatory compliance requirements. For example, cloud bursting is often cited as a viable option for retailers that experience peaks in demand during the holiday shopping season. However, cloud computing service providers do not necessarily offer an environment compliant with the Payment Card Industry Data Security Standard, and retailers could be putting sensitive data at risk by bursting it to the public cloud.
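The suitability criteria above can be written down as a simple screening check. The sketch below is only one way to encode them: the `Workload` fields, the `is_burst_candidate` function and the sample applications are all invented for illustration, and a real assessment would involve a proper compliance review, not a boolean flag.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    business_critical: bool
    handles_sensitive_data: bool  # e.g. cardholder data covered by PCI DSS
    internal_dependencies: int    # data center systems the application relies on


def is_burst_candidate(workload: Workload, max_dependencies: int = 0) -> bool:
    """Return True for noncritical, nonsensitive, loosely coupled workloads."""
    return (
        not workload.business_critical
        and not workload.handles_sensitive_data
        and workload.internal_dependencies <= max_dependencies
    )


# Hypothetical applications for a retailer.
catalog = Workload("product-catalog", False, False, 0)
checkout = Workload("payment-checkout", True, True, 3)

print(is_burst_candidate(catalog))   # True
print(is_burst_candidate(checkout))  # False
```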

Cloud bursting is also useful for software development and analytics, as well as big data modeling and marketing campaigns. For example, organizations that handle big data or machine learning can use cloud bursting to generate models that exceed their private cloud capacity. An organization can also use cloud bursting alongside a marketing campaign if it expects a large influx of traffic as a result. Cloud service providers such as Amazon Web Services, Google Cloud and Microsoft Azure can support cloud bursting.

The benefits of cloud bursting

The main benefits of cloud bursting include:

- Cost. An organization only pays for extra compute resources when needed. Likewise, private cloud infrastructure costs can be kept low by maintaining only minimal resources.

- Flexibility. Cloud bursting can quickly adjust to cloud capacity needs. It also frees up private cloud resources.

- Business continuity. An application can burst over into the public cloud without interrupting its users.

- Peaks in traffic. If an organization expects a sudden increase in traffic, such as during a holiday, cloud bursting can absorb both expected and unexpected peaks in demand for compute resources.
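The cost benefit can be made concrete with some back-of-the-envelope arithmetic. Every figure in the sketch below is hypothetical (server counts, monthly and hourly prices, length of the peak) and was chosen only to show the shape of the comparison between sizing the private cloud for peak demand and sizing it for baseline demand plus bursting.

```python
def monthly_cost(
    private_servers: int,
    private_cost_per_server: float,
    burst_server_hours: int,
    public_cost_per_server_hour: float,
) -> float:
    """Fixed private cloud cost plus pay-as-you-go public cloud cost."""
    fixed = private_servers * private_cost_per_server
    variable = burst_server_hours * public_cost_per_server_hour
    return fixed + variable


# Option A: size the private cloud for the peak (100 servers, no bursting).
peak_sized = monthly_cost(100, 300.0, 0, 0.50)

# Option B: keep 60 servers and burst 40 extra servers for a 72-hour peak.
burst_sized = monthly_cost(60, 300.0, 40 * 72, 0.50)

print(peak_sized)                # 30000.0
print(burst_sized)               # 19440.0
print(peak_sized - burst_sized)  # 10560.0
```

With these assumed numbers, bursting is cheaper because the extra 40 servers are paid for only during the peak. The result reverses if peaks are long or frequent enough, so the comparison has to be rerun with an organization's own figures.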

The challenges of using cloud bursting

Cloud bursting does not come without its challenges, however. These include:

- Security. Public clouds host many organizations on shared infrastructure, so if a public cloud is attacked, the data of any organization using it can be at risk.

- Data protection. It may be difficult to keep backups consistent when they are fed from multiple sources.

- Networking. Organizations may find it difficult to build low-latency and high-bandwidth redundant connections between public and private clouds.

Other issues related to cloud bursting arise from the potential for incompatibility between the different environments and the limited availability of management tools. Cloud computing service providers and virtualization vendors have developed tools to send workloads to the cloud and manage hybrid environments, but they often require all environments to be based on the same platform. As a result of these challenges, few companies are able to deploy cloud bursting architectures.
