Top public cloud providers of 2024: A brief comparison

How do AWS, Microsoft and Google stack up against each other when it comes to regions, zones, interfaces, costs and SLAs? We break down the features of these cloud providers.

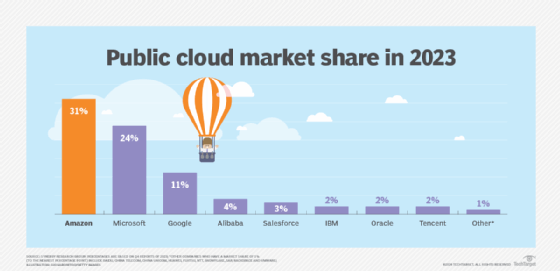

The public cloud marketplace consists of numerous cloud providers. Amazon, Microsoft and Google collectively accounted for 67% of the total cloud market in the fourth quarter of 2023, according to Synergy Research Group. The remaining public cloud market is divided among Alibaba, Salesforce, IBM, Oracle and several smaller players. This article examines cloud offerings from Amazon, Microsoft and Google.

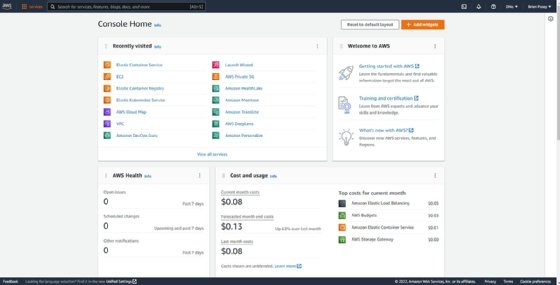

1. AWS

AWS, shown in Figure 1, is one of the largest cloud providers. The AWS cloud consists of more than 200 individual services. AWS earned $90.8 billion in revenue in 2023, according to Statista, though the rate of growth has decreased dramatically over the last couple of years.

SLA

Amazon doesn't provide a standardized uptime commitment or service-level agreement (SLA) across the entire AWS cloud, but rather provides SLAs for each individual service. In the case of EC2 -- Amazon's platform for hosting VM instances -- Amazon offers a regional-level SLA of 99.99% and an instance-level SLA of 99.5%. The company provides a tiered system of service credits, where the service credit percentage is tied to the duration of an outage, with longer outages eligible for larger service credits.
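The tiered credit mechanism described above can be sketched in a few lines of Python. This is only an illustration: the tier thresholds and credit percentages in `HYPOTHETICAL_TIERS` are invented for the example and are not Amazon's published schedule, and the function names are likewise hypothetical.

```python
# Hypothetical credit tiers: (minimum monthly uptime %, credit % of the bill).
# These values are illustrative assumptions, not Amazon's actual schedule.
HYPOTHETICAL_TIERS = [
    (99.99, 0),    # commitment met: no credit
    (99.0, 10),    # short outage: small credit
    (95.0, 30),    # longer outage: larger credit
    (0.0, 100),    # severe outage: full credit
]


def uptime_percent(outage_minutes, days_in_month=30):
    """Convert total outage minutes in a month into an uptime percentage."""
    total_minutes = days_in_month * 24 * 60
    return 100.0 * (total_minutes - outage_minutes) / total_minutes


def service_credit_percent(uptime, tiers=HYPOTHETICAL_TIERS):
    """Return the credit percentage for the first tier the uptime satisfies."""
    for minimum_uptime, credit in tiers:
        if uptime >= minimum_uptime:
            return credit
    return tiers[-1][1]


for outage in (2, 60, 600, 3000):
    uptime = uptime_percent(outage)
    credit = service_credit_percent(uptime)
    print(f"{outage:>5} min outage -> {uptime:.3f}% uptime -> {credit}% credit")
```

Because the tiers are ordered from the highest uptime threshold down, the first match is always the smallest credit the measured uptime qualifies for, which mirrors the idea that longer outages earn larger credits.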

Regions and zones

One of the most important considerations when evaluating a cloud provider is the number of global regions and availability zones offered. AWS currently spans 33 regions around the globe, with a total of 105 availability zones.

Interface

Another important factor when selecting a public cloud is the range of options for interacting with cloud resources. Cloud providers, such as Amazon, Microsoft and Google, make SDKs available to developers so applications can more easily use cloud resources. From an administrative standpoint, the various cloud providers offer both a web portal and a CLI. Amazon's management portal for AWS is shown in Figure 1. The command-line environment, known as AWS CLI, can be installed on Windows, macOS or Linux. Although the supported commands largely resemble those used in Linux environments, Amazon also offers a PowerShell version of AWS CLI.

Pricing

Like the competing clouds, Amazon uses a pay-as-you-go model for its AWS cloud resources. The formulas used to calculate usage costs are quite complex, but Amazon offers a pricing calculator that can help determine overall costs. Amazon also makes some resources available in a free tier. These free-tier resources tend to be helpful to those who want to learn how the various AWS services work without investing a lot of money.
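To make the pay-as-you-go idea concrete, here is a deliberately simplified Python sketch of a monthly estimate. The rates used are made-up placeholders rather than real AWS prices, and `monthly_cost` is a hypothetical helper; an actual bill includes many more dimensions (data transfer, requests, region, instance type and so on), which is why the provider's own pricing calculator remains the better tool.

```python
def monthly_cost(hourly_rate, hours_used, storage_gb=0.0, storage_rate_per_gb=0.0):
    """Estimate a monthly bill from compute hours plus stored gigabytes.

    All rates are supplied by the caller; nothing here reflects real pricing.
    """
    compute = hourly_rate * hours_used
    storage = storage_gb * storage_rate_per_gb
    return compute + storage


# Placeholder figures: a VM at $0.10 per hour running for 730 hours,
# plus 100 GB of storage at $0.08 per GB per month.
estimate = monthly_cost(0.10, 730, storage_gb=100, storage_rate_per_gb=0.08)
print(f"Estimated monthly cost: ${estimate:.2f}")  # Estimated monthly cost: $81.00
```

The same function shows why usage matters under this model: halving the hours the VM runs halves the compute portion of the estimate, while the storage portion stays the same.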

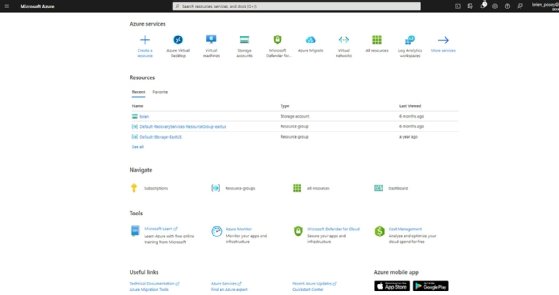

2. Microsoft Azure

Microsoft Azure, shown in Figure 2, is widely considered the world's second-largest cloud. The most recent figures available, from the fourth quarter of 2023, indicate that Microsoft earned more than $111 billion in revenue in 2023 from its cloud services. However, that revenue comes not only from Azure, but also from other cloud services such as Microsoft 365. Azure is estimated to account for more than 50% of these earnings.

SLA

Like AWS, Microsoft has a separate SLA for each individual service within its Azure cloud. Microsoft's uptime guarantee for Azure VMs is similar to Amazon's SLA. Deployments of at least two VM instances spread across two availability zones are guaranteed to have 99.99% availability. That drops to 99.95% availability if the instances are in the same availability zone. The SLA for single-instance VMs varies depending on the hardware configuration, but all VM instances are guaranteed to have at least 95% availability. Like Amazon, Microsoft offers credits when SLAs aren't met.
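Availability percentages are easier to compare once they are converted into a downtime budget. The short Python sketch below does that for the three Azure VM figures quoted above, assuming a 30-day month; the function name and the labels are only illustrative.

```python
def allowed_downtime_minutes(sla_percent, days_in_month=30):
    """Return the minutes of downtime per month that an SLA percentage permits."""
    total_minutes = days_in_month * 24 * 60
    return total_minutes * (100 - sla_percent) / 100


azure_vm_slas = [
    ("Two or more instances, two zones", 99.99),
    ("Two or more instances, same zone", 99.95),
    ("Single instance, minimum guarantee", 95.0),
]

for label, sla in azure_vm_slas:
    minutes = allowed_downtime_minutes(sla)
    print(f"{label}: {sla}% -> {minutes:.1f} minutes per month")
```

Under the 30-day assumption, 99.99% allows roughly 4.3 minutes of downtime per month, 99.95% allows about 21.6 minutes, and 95% allows 2,160 minutes, or 36 hours.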

Regions and zones

Microsoft maintains numerous regions and availability zones within the Azure cloud. These availability zones are separate data centers within a region, with a round-trip latency within the region of less than 2 milliseconds. Microsoft currently offers 60-plus regions with multiple availability zones for each.

Interface

The Microsoft Azure portal, shown in Figure 2, is relatively easy to navigate, and most Azure services and resources can be managed from within the portal without having to delve into the command-line environment. For those who would prefer to use a command-line environment, Microsoft offers Azure CLI. Like AWS CLI, Azure CLI can be installed on Windows, Linux or macOS. There's also an option to run Azure CLI in Docker or in Azure Cloud Shell. Like AWS CLI, Azure CLI uses Linux-style commands. Microsoft also offers Azure PowerShell, which is essentially a PowerShell module that can be used to manage Azure resources from a PowerShell session.

Pricing

Microsoft claimed "AWS is up to five times more expensive than Azure for Windows Server and SQL Server." Microsoft further stated that running Windows VMs on Azure can yield savings of up to 71% over running those VMs on Amazon EC2. The company made similar claims about SQL managed instances and SQL Server VMs, with savings of 85% and 45%, respectively. Ultimately, the amount paid depends on several factors. Microsoft provides a pricing calculator to estimate the cost of running various workloads on Azure.
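The claims above express price differences in two ways: as a multiple ("five times more expensive") and as a percentage saving ("71% savings"). The two are related by simple arithmetic, sketched below in Python. The helper names are hypothetical, and the calculation only converts between the two phrasings; it says nothing about whether the underlying claims hold for a given workload.

```python
def savings_from_price_ratio(ratio):
    """If option A costs `ratio` times option B, return the fraction saved by choosing B."""
    return 1 - 1 / ratio


def price_ratio_from_savings(savings):
    """If option B saves `savings` (as a fraction) over A, return how many times B's price A costs."""
    return 1 / (1 - savings)


# "Up to five times more expensive" corresponds to savings of up to 80%.
print(f"5x price ratio -> {savings_from_price_ratio(5):.0%} savings")

# The quoted savings figures, expressed as price multiples.
for savings in (0.71, 0.85, 0.45):
    ratio = price_ratio_from_savings(savings)
    print(f"{savings:.0%} savings -> {ratio:.2f}x price ratio")
```

Run as written, the loop shows that savings of 71%, 85% and 45% correspond to price multiples of about 3.45, 6.67 and 1.82. The figures apply to different workloads, so they need not agree with one another or with the five-times headline.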

3. Google Cloud

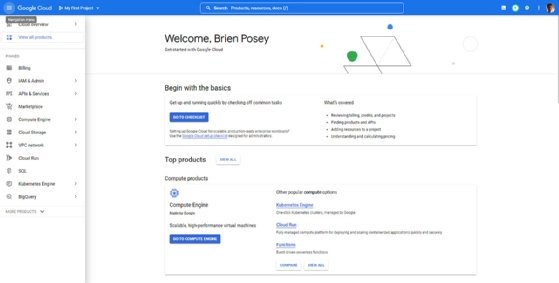

Google Cloud, shown in Figure 3, is the third-largest cloud platform. It generated $33.09 billion in revenue in 2023, accounting for over 10% of Google's total revenues, according to Statista.

SLA

Like Microsoft and Amazon, Google ties its SLAs to specific services. In the case of Google Compute Engine, the SLA varies based on tier. A single instance in the Premium Tier is guaranteed to have an uptime of at least 99.9%. The guarantee rises to 99.99% for instances deployed across multiple zones. Google also offers a financial credit when it fails to meet its SLAs.
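One way to see why spreading instances across zones supports a higher guarantee is the standard redundancy calculation, sketched below in Python. It assumes that each instance fails independently of the others, which real deployments only approximate, and it is not how Google derives its SLA figures; the function name is hypothetical.

```python
def combined_availability(single_availability, instance_count):
    """Probability that at least one of several independent instances is up.

    Assumes failures are statistically independent, which is an idealization.
    """
    probability_all_down = (1 - single_availability) ** instance_count
    return 1 - probability_all_down


single = 0.999  # the 99.9% single-instance figure quoted above
for count in (1, 2, 3):
    availability = combined_availability(single, count)
    print(f"{count} instance(s): {availability:.4%}")
```

With two independent instances at 99.9% each, the idealized result is about 99.9999%, which is higher than the 99.99% multi-zone guarantee. That gap is expected: an SLA is a contractual commitment backed by credits rather than a prediction, and correlated failures make the independence assumption optimistic.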

Regions and zones

Google has an impressive number of data centers in the Asia-Pacific region, North America, South America, Europe and the Middle East. Collectively, these account for the company's 40 regions and over 120 availability zones. There are approximately three dozen availability zones in North America alone.

Interface

In addition to the web portal shown in Figure 3, Google offers a command-line environment known as gcloud CLI. It supports Linux-style commands and can be installed on Linux, macOS or Windows. There are also downloads available for Debian, Ubuntu, Red Hat, Fedora and CentOS.

Pricing

Google offers the same type of pay-as-you-go pricing as other providers. Those who wish to try out Google Cloud can receive up to $300 in free credits and access to more than 20 products. Because cloud pricing tends to be complex, Google provides a pricing calculator that can estimate what it will cost to run various workloads in Google Cloud.

Brien Posey is a 15-time Microsoft MVP with two decades of IT experience. He has served as a lead network engineer for the U.S. Department of Defense and as a network administrator for some of the largest insurance companies in America.