Techniques for optimizing microservice performance in the cloud

Learn how to prevent bottlenecks, create efficient deployment and avoid security issues to ensure optimal microservice performance in the cloud.

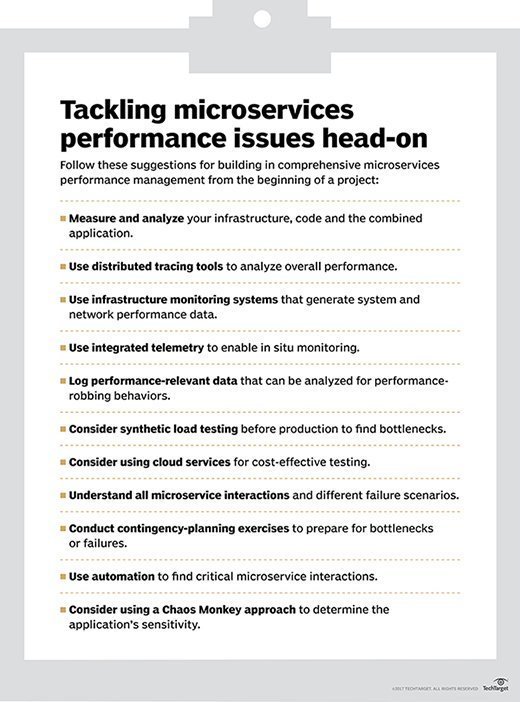

The more a business depends on microservices for software development, the more critical it is to tune microservice performance. At the least, development teams will need to balance microservice performance against other benefits, such as service reuse. Getting this right means understanding the available models of microservice binding, handling security and governance as efficiently as possible, and structuring deployment and network connectivity to prevent bottlenecks at every stage of the operational lifecycle.

A microservice, at the highest level, is a software component that can be reused freely by multiple applications and that can be scaled and moved as needed. These attributes strongly suggest that microservices are "stateless," meaning they don't hold information between activations, so any copy of one can process a message with the same result. Where those copies end up relative to their users shapes performance, and so do the API management tools that mediate between a request for a microservice and the microservice itself. Both have to be considered at the same time.
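
To make the statelessness property concrete, here is a minimal Python sketch. The `QuoteRequest` message, the `price_quote` handler and the `CallCountingQuoter` counter-example are hypothetical names invented for illustration: the stateless handler computes its answer only from the message, while the counter-example keeps per-instance history, so two copies can return different results for the same request.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuoteRequest:
    # Everything the handler needs travels inside the message itself.
    unit_price_cents: int
    quantity: int
    discount_pct: int


def price_quote(req: QuoteRequest) -> int:
    """Stateless handler: the result depends only on the request, so any
    replica that receives this message computes the same answer."""
    gross = req.unit_price_cents * req.quantity
    return gross - gross * req.discount_pct // 100


class CallCountingQuoter:
    """Counter-example: it remembers how many requests *this* instance has
    served, so two replicas can answer the same message differently."""

    def __init__(self) -> None:
        self.calls = 0

    def handle(self, req: QuoteRequest) -> int:
        self.calls += 1
        # Hypothetical rule: ignore the request's discount and apply 5%
        # only after this particular instance has seen an earlier call.
        discount = 5 if self.calls > 1 else 0
        return price_quote(QuoteRequest(req.unit_price_cents, req.quantity, discount))


req = QuoteRequest(unit_price_cents=1250, quantity=4, discount_pct=10)
print(price_quote(req))  # identical on every replica

replica_a, replica_b = CallCountingQuoter(), CallCountingQuoter()
replica_a.handle(req)  # only replica A has history now
print(replica_a.handle(req), replica_b.handle(req))  # the two answers differ
```

Only the first style can be copied, scaled or moved freely, which is what makes the placement options discussed below possible.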

When you bind a microservice into an application, there are two basic choices. First, you can bind a unique copy of the microservice with other logic to form a deployment unit. This doesn't inhibit its reuse, and if the deployment unit is scalable, the definition above is still satisfied. Second, you can share a copy of the microservice among the applications or components being run. In that case, the workflow to and from the microservice has to move through the network, so its performance may vary depending on where the microservice is hosted relative to its users. Incorporating the microservice into each deployable unit adds no network hop, so that option needs no placement-related performance tuning. It's the second case that has to be explored.
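
A rough way to see why only the shared case needs tuning is to add up what each call pays. This sketch assumes calls are made one after another and uses invented round-trip and API-manager figures; the `Binding` type and `request_latency_ms` function are hypothetical, not part of any tool, so substitute measurements from your own environment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Binding:
    name: str
    network_rtt_ms: float  # caller <-> microservice round trip; 0 when packaged together
    mediation_ms: float    # extra hop through an API manager, if one sits in the path


def request_latency_ms(processing_ms: float, calls_per_request: int, binding: Binding) -> float:
    """Rough time an application request spends in microservice calls,
    assuming the calls are made sequentially rather than in parallel."""
    per_call = processing_ms + binding.network_rtt_ms + binding.mediation_ms
    return calls_per_request * per_call


# Illustrative numbers only.
EMBEDDED = Binding("embedded in the deployment unit", 0.0, 0.0)
SHARED_LOCAL = Binding("shared, same data center", 0.5, 1.0)
SHARED_REMOTE = Binding("shared, remote data center", 40.0, 1.0)

for b in (EMBEDDED, SHARED_LOCAL, SHARED_REMOTE):
    print(f"{b.name}: {request_latency_ms(2.0, 5, b):.1f} ms")
```

With these made-up figures, five calls cost 10 ms embedded, 17.5 ms shared locally and 215 ms shared from a remote data center, so the placement of shared copies dominates the tuning effort.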

Getting into microservice performance tuning

The biggest issue to explore in performance tuning is what could be called the combined application topology, meaning where the components of all the applications that share microservices are hosted, relative to each other and to the microservices. The application topology will be determined in most cases by the geographic distribution of the hosting facilities. What you'll need to do for microservice performance optimization will depend on whether hosting facilities are widely or narrowly distributed.

When hosting is narrowly distributed (the limiting case being that everything is hosted in the same data center), performance optimization involves only hosting performance and local data center switching. The general rule is to use the fastest possible network switches to link servers and to avoid switch configurations that could create traffic bottlenecks depending on the server-to-server path taken to access a shared microservice. Where many microservices are used, or where heavy microservice traffic is expected, consider fabric switching within the data center. Control of local switching is particularly important where API managers apply security and compliance policies, because these managers add a connection hop to the workflow.
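
Whether a given server-to-server path becomes a bottleneck comes down to its slowest link. This minimal sketch uses hypothetical link speeds, a hypothetical 8 Gbps of shared-microservice traffic and an arbitrary 70% utilization ceiling to compare a same-rack path, a path through a slower aggregation layer and a path across a switching fabric; the helper names are illustrative only.

```python
# Hypothetical link capacities (Gbps) along each server-to-server path that
# a call to a shared microservice might take inside one data center.
PATHS_GBPS = {
    "same rack (top-of-rack switch)": [25, 25],
    "cross rack via aggregation layer": [25, 10, 10, 25],
    "cross rack via switching fabric": [25, 100, 100, 25],
}


def bottleneck_gbps(links):
    """A path can carry no more than its slowest link."""
    return min(links)


def path_has_headroom(links, demand_gbps, max_utilization=0.7):
    """True if the expected traffic keeps the slowest link under the
    chosen utilization ceiling (0.7 is an arbitrary example value)."""
    return demand_gbps <= max_utilization * bottleneck_gbps(links)


for name, links in PATHS_GBPS.items():
    print(name, path_has_headroom(links, demand_gbps=8.0))
```

Under these assumptions only the aggregation-layer path fails the check, which illustrates why fabric switching helps when microservice traffic is heavy.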

Where data center hosting is distributed, the problem is that application components, including microservices, could be hosted in many possible places, especially once scaling or fail-over is considered. Some of these places could introduce excessive delay, so the first step is to determine the worst-case delay. Modeling or testing can show whether placing a microservice on the least optimal server (generally, the one geographically furthest) relative to the applications that use it will have an objectionable impact on quality of experience (QoE). If it doesn't, your data centers are effectively centralized, and you needn't worry unless the hosting configuration options change.
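
The worst-case check can be modeled simply once you have round-trip times between sites. This sketch assumes a hypothetical three-site RTT table, sequential microservice calls and a made-up 200 ms QoE budget; `worst_case_rtt` and `meets_qoe` are illustrative helpers, not part of any product. It also shows the effect of constraining where the microservice may be placed.

```python
from itertools import product

# Hypothetical round-trip times (ms) between data centers; each pair is listed once.
RTT_MS = {
    ("east", "east"): 0.5, ("central", "central"): 0.5, ("west", "west"): 0.5,
    ("east", "central"): 25.0, ("central", "west"): 45.0, ("east", "west"): 70.0,
}


def rtt(a: str, b: str) -> float:
    """Symmetric lookup in the RTT table."""
    return RTT_MS[(a, b)] if (a, b) in RTT_MS else RTT_MS[(b, a)]


def worst_case_rtt(app_sites, allowed_service_sites):
    """Largest RTT any application component could see if the microservice
    lands (through placement, scaling or fail-over) at any allowed site."""
    return max(rtt(a, s) for a, s in product(app_sites, allowed_service_sites))


def meets_qoe(app_sites, allowed_service_sites, calls_per_request, budget_ms):
    """Sequential calls, so the worst case is calls x worst-case RTT."""
    return calls_per_request * worst_case_rtt(app_sites, allowed_service_sites) <= budget_ms


apps = ["east", "central"]
print(meets_qoe(apps, ["east", "central", "west"], 5, budget_ms=200.0))  # unconstrained
print(meets_qoe(apps, ["east", "central"], 5, budget_ms=200.0))          # constrained placement
```

Here the unconstrained case fails (5 × 70 ms = 350 ms), while limiting placement to the east and central sites passes (5 × 25 ms = 125 ms).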

Where worst-case microservice placement does create QoE problems, you have two options: constrain placement or improve connection performance. Each of these can have benefits and present challenges, so look at your approach carefully before you start.

Ways to improve connection performance

Improving connection performance means either reducing the latency on connections between data centers, which is the path microservice workflows take, or making compensatory improvements in user-to-application connectivity to offset the added microservice workflow delays. The latter would mean speeding up the virtual private network overall, which usually isn't an option, so focus on whether the cost of an improved data center interconnection (DCI) facility would be justified.

If you can't improve connection performance, you'll have to constrain where microservices are placed. If there are a few hosting locations that have better connectivity with other hosting points, hosting all microservices in these natural hubs will improve performance overall. You may also be able to make selective improvements in DCI performance to create such a natural hub point.
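
Finding a natural hub can be framed as a minimax choice: pick the site whose worst round trip to any application site is smallest. This sketch uses a hypothetical RTT table; `best_hub` is an illustrative helper, and a real placement decision would also weigh capacity and cost.

```python
# Hypothetical round-trip times (ms) between data centers; each pair is listed once.
RTT_MS = {
    ("east", "east"): 0.5, ("central", "central"): 0.5, ("west", "west"): 0.5,
    ("east", "central"): 25.0, ("central", "west"): 45.0, ("east", "west"): 70.0,
}


def rtt(a: str, b: str) -> float:
    """Symmetric lookup in the RTT table."""
    return RTT_MS[(a, b)] if (a, b) in RTT_MS else RTT_MS[(b, a)]


def worst_rtt_from(site, app_sites):
    """Worst RTT any application site would see to a microservice hosted at `site`."""
    return max(rtt(a, site) for a in app_sites)


def best_hub(app_sites, candidate_sites):
    """Minimax choice; ties go to whichever candidate is listed first."""
    return min(candidate_sites, key=lambda s: worst_rtt_from(s, app_sites))


sites = ["east", "central", "west"]
hub = best_hub(sites, sites)
print(hub, worst_rtt_from(hub, sites))
```

With these numbers the central site wins, with a worst-case RTT of 45 ms versus 70 ms for east or west.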

The alternative is to relax the rule of microservice sharing. Because any copy of a microservice can handle work equally well, and because scaling microservices under load involves spinning up additional instances anyway, you may want to deploy several copies of a given microservice, each serving a specific user community or group of applications.

If every application or user community is considered separately when deciding where to host microservices, even widely distributed hosting points can be accommodated at acceptable QoE by picking an optimum place (or range of places) to host a dedicated set of "community microservices." As long as application lifecycle management processes are tuned to ensure these copies never get out of sync, the benefits of microservices are preserved.
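
Per-community placement and the sync requirement can both be sketched in a few lines. The community names, site names, RTT figures and version strings below are hypothetical: each community gets its own copy at the site that minimizes its worst-case RTT, and a simple check flags copies running different releases.

```python
# Hypothetical round-trip times (ms) between data centers; each pair is listed once.
RTT_MS = {
    ("east", "east"): 0.5, ("central", "central"): 0.5, ("west", "west"): 0.5,
    ("east", "central"): 25.0, ("central", "west"): 45.0, ("east", "west"): 70.0,
}
SITES = ["east", "central", "west"]

# Hypothetical user communities and the sites their applications run in.
COMMUNITIES = {
    "retail": ["east"],
    "logistics": ["west"],
    "analytics": ["east", "central", "west"],
}


def rtt(a: str, b: str) -> float:
    """Symmetric lookup in the RTT table."""
    return RTT_MS[(a, b)] if (a, b) in RTT_MS else RTT_MS[(b, a)]


def place_community_copies(communities, sites):
    """Give each community its own copy at the site with the smallest
    worst-case RTT to that community's applications."""
    return {
        name: min(sites, key=lambda s: max(rtt(a, s) for a in app_sites))
        for name, app_sites in communities.items()
    }


def copies_in_sync(deployed_versions):
    """True when every copy runs the same release (vacuously true if none)."""
    return len(set(deployed_versions.values())) <= 1


placement = place_community_copies(COMMUNITIES, SITES)
print(placement)

versions = {name: "1.4.2" for name in placement}
versions["logistics"] = "1.4.1"  # one copy missed the latest rollout
print(copies_in_sync(versions))
```

A check like this could run in the deployment pipeline after each rollout, so a copy that falls behind is caught before its community sees different results.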

The key point in microservice performance management in the cloud is that "faster stuff" alone won't always be the best answer or even a useful answer. Microservices are flexible in their application, and you'll need to be creative in exploiting their capabilities to get the best performance at the lowest cost.